
AI for Vision Care Management: Where to Begin?

Not sure where to start with AI in your vision care practice? We'll walk you through the basics and help you understand the pros and cons.

Vision care leaders: not sure where to begin with AI? This guide is for you!

When most people use the term "AI" today, they're talking about large language models (LLMs), and more specifically, chatbots like ChatGPT (OpenAI), Claude (Anthropic), or Gemini (Google).

Without getting overly technical, today's LLMs are predictive models: they are trained on vast datasets, including books, websites, technical manuals, and more, and they generate text by predicting which words are likely to come next. These immense datasets, combined with advances in machine learning, make LLMs extremely powerful at generating text-based content.

As the name suggests, chatbots are conversational applications built on LLMs. You submit queries as natural-language prompts, simply telling the chatbot what you want in plain language. This means you can use chatbots to compose or edit emails, write social media posts, create presentations, research topics or search the web, summarize information, and more.

The best way to get a feel for LLMs and explore their pros and cons is to try them firsthand. But although chatbots are intuitive to use, the best practices and some of the caveats around LLMs are not immediately obvious.

Even if you've never used a chatbot before, we aim to provide enough information that you can feel comfortable getting started and learning for yourself. Let's go!

Step 1: Choose an LLM Platform

ChatGPT, Claude, and Gemini all have similar basic capabilities, so you can choose whichever one you'd like to get started. While you're learning, we recommend sticking with one platform.

Because ChatGPT is currently the most popular chatbot in the United States, we'll use it for our examples. But the same advice applies across the major platforms.

If you're just getting started, don't worry about signing up for a paid account yet, but do sign up for a free individual account with your email address.

While it's possible to use ChatGPT and other chatbots without creating a free account and logging in, you won't be able to upload files or use other basic features without an account.

For initial experimentation and personal use, consider signing up with your personal email rather than your work domain email address. You can set up a business account later and keep your personal use separate from your work.

Paid plans like ChatGPT Plus, Pro, Business, Team, and Enterprise provide perks including access to more advanced models, higher usage limits for those models, and other features, starting at $20/month per user. But you don't need a paid account to get a feel for these tools.

If your organization already uses enterprise services from Microsoft or Google, you may already have access to Microsoft Copilot or Google Gemini Advanced, respectively.

Copilot uses GPT-5 and other OpenAI models, but it is configured differently from ChatGPT and integrates more easily with Microsoft 365. If you're not getting the results you want with Copilot, you may have better luck with ChatGPT. On the other hand, for some users, Copilot's native integration with Microsoft's suite of enterprise products is a big advantage.

Privacy and Sensitive or Confidential Information

ChatGPT and other LLMs store your account information and your prompt history. As with search engines and similar tools, it's prudent to avoid sharing highly sensitive information of a personal or business nature with them.

In other words, it's best not only to keep your personal ChatGPT account separate from official business use, but also to remember that information you share may be used to train models or otherwise become visible to third parties. (ChatGPT and most other LLM platforms offer an "opt out" setting that excludes your prompts from training data.)

For vision care practices specifically, if you choose to integrate LLMs into your business workflows, you must also consider HIPAA requirements for protected health information (PHI) and the related pitfalls.

Before implementing enterprise-level AI into any processes that may touch PHI, ask about obtaining a business associate agreement (BAA) that ensures HIPAA restrictions are upheld. OpenAI, Microsoft, and Google all offer BAAs on their Enterprise plans.

Step 2: Get Oriented and Experiment

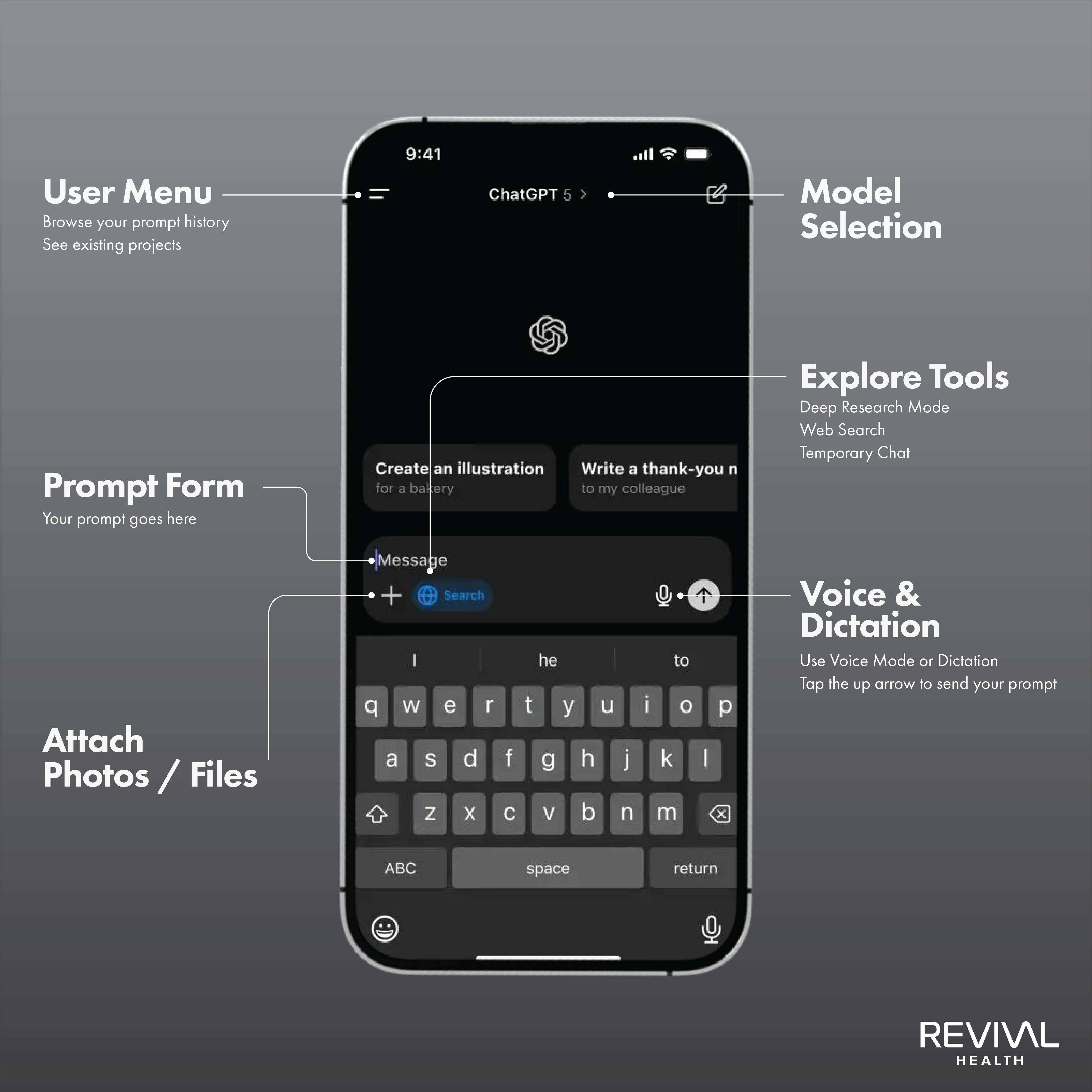

Once you've created your free ChatGPT account and logged in on the website or mobile app, you'll see the following features and buttons:

- User menu with your prompt history and list of any existing projects

- The prompt form (a text box that says "Ask anything")

- Attachment button to add images or other files (a plus sign or "+")

- Dictation and voice mode buttons

- A "submit prompt" button (up arrow) that will appear after you type a prompt

- Deep Research mode, Web Search, "Temporary Chat" option, and other available tools

- Model selection menu (default choice is ChatGPT 5)

Default models like OpenAI's GPT-5 and Google's Gemini 2.5 Flash are efficient, well-rounded models that can handle most text-based prompts.

In contrast, advanced models are more capable on complex or specialized tasks, but they also use more computing resources, so most LLM providers restrict their use on the free tier.

For vision care professionals, default models can handle most needs unless you're working with code, spreadsheets, or financials.

Deep Research is another optional tool that can be handy. It takes several minutes or longer to browse the web and synthesize information for your specific query. As with advanced models, users receive a limited number of monthly credits for Deep Research mode, so use it sparingly.

Get Creative with Prompting

Now's the time to experiment with some simple, plain-language prompts to explore the strengths and limitations of the tool.

A prompt is any text or information you provide to receive a response from ChatGPT (or your LLM of choice). These can range from simple questions to highly detailed instructions.

Suggested personal use prompts to try:

- Suggest a better way to structure my day when I feel pulled in too many directions.

- Rewrite this to-do list to group similar tasks and highlight what’s most important.

- Give me 3 quick mindset shifts to help me stop procrastinating on boring tasks.

- Turn this list of notes into a simple action plan I can follow this week.

- Help me summarize this long article so I can remember and apply the key takeaways.

- Recommend a few reflective questions I can ask myself at the end of a workday.

Suggested business use prompts to try:

- Rewrite this message so it sounds clear, confident, and professional.

- Review this short bio and make it sound more polished for a website or newsletter.

- Help me summarize this document so I can explain it to my team.

- Take this rough idea and turn it into a first-draft agenda for a team meeting.

- Make this SOP more readable and easier to follow for new hires.

- Suggest improvements to this follow-up message I sent to a potential client.

As an expert in your field, you'll notice that ChatGPT doesn't always give a perfect response, or even an accurate one. When this happens, it's worth following up with another prompt that explains what went wrong and asks for a better result.

Use Projects to Improve Memory

With a logged-in user account, ChatGPT will access some general information from your chat history to improve your prompt results (unless you select the "Temporary Chat" option or turn off memory in "User Settings").

However, it doesn't reliably "learn" in real time, and in particular it tends not to carry specific details you provide in one chat over to other chats. This is where the "Projects" feature comes in handy.

Projects are essentially folders where you can store multiple chats, which helps ChatGPT carry information between those chats. For example, you might title a project "Q3 Financial Planning" and add any related chats to that project folder.

Once you create a new Project folder, you can create new chats in that Project as well as drag and drop existing chats into the folder.

Step 3: Combine Prompt Engineering with Your Best Use Cases

Sometimes a simple, one-line prompt gets the job done. But as you may have already discovered, a basic prompt may return generic or even off-topic responses.

In many ways, the usefulness of ChatGPT and other LLMs depends on your ability to create a useful prompt. Designing ultra-specific prompts for optimal results is known as "prompt engineering."

Because LLMs are trained on extremely large datasets, there are countless possible responses to any given prompt. By adding specificity and detail to your prompt, you help the LLM narrow down the possibilities and deliver a more relevant, helpful response.

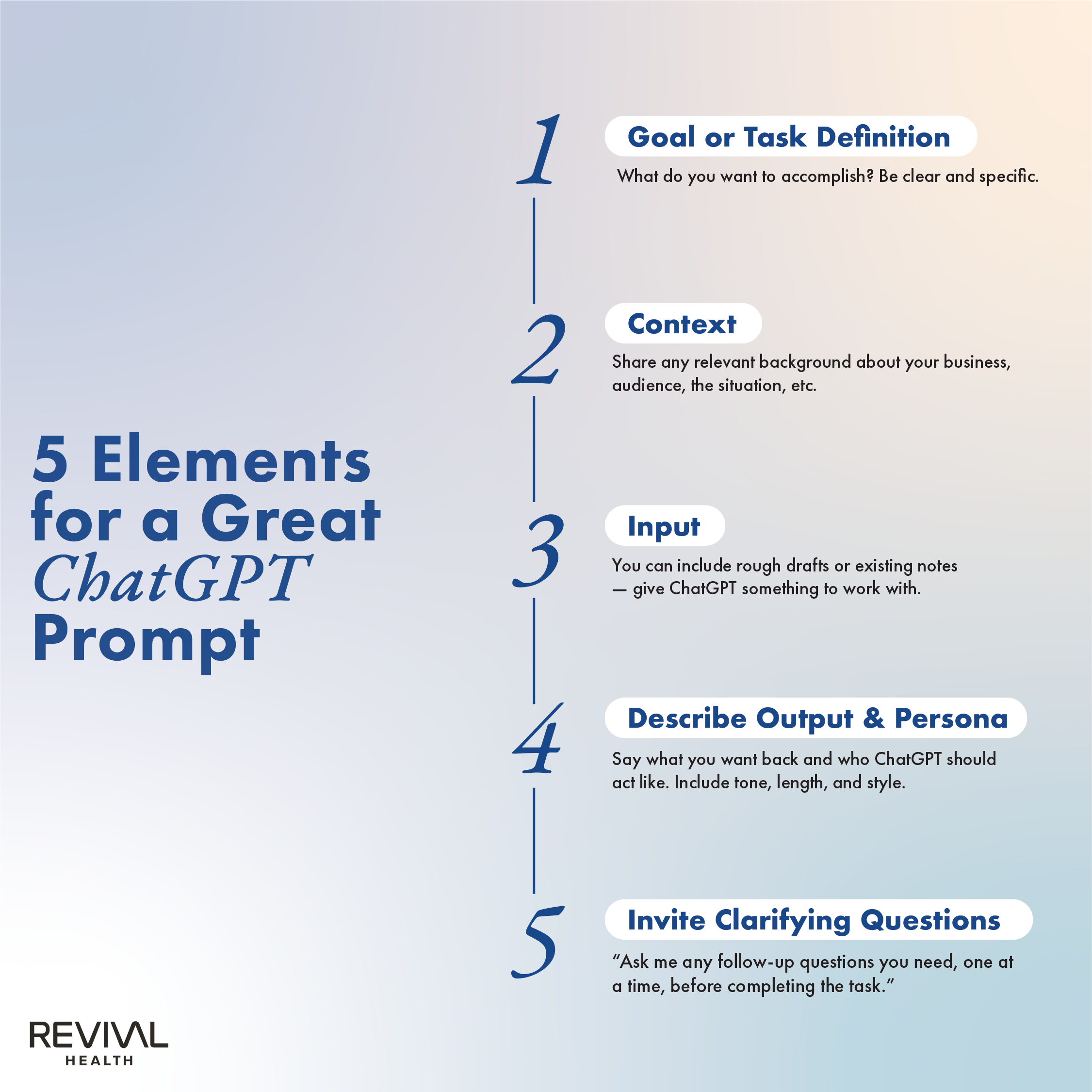

Here are the elements of a good prompt for ChatGPT and other LLMs:

- Goal or Task Definition

Start by stating what you want to accomplish. Be clear and specific.

Example: “I want to rewrite a reminder email to make it friendlier and more patient-centered.”

- Context

Give ChatGPT relevant background about your business, your audience, or your situation.

Example: “We’re a multi-location optometry group serving mostly older adults in suburban North Carolina.”

- Input (Optional)

Include any draft content, notes, or information you want ChatGPT to work with.

Example: “Here’s the original appointment reminder message we’ve been sending…”

- Output or Rules

Describe parameters for what you want back: format, tone, and length all help.

Example: “Return a short, friendly message under 100 words, in a tone that feels caring and reassuring.”

- Examples (Optional)

If you have examples of a good tone, style, or structure, include them for reference.

Example: “Use the tone from the postcard copy below. It’s straightforward and warm.”

- Persona (Optional)

You can tell ChatGPT who it should act like. This helps tailor the response to your goals.

Example: “You are an expert in patient communication for healthcare practices.”

- Invite Clarifying Questions

Ask ChatGPT to gather any missing details from you before it answers.

Example: “Ask me any follow-up questions you need, one at a time, before completing the task.”

You don't need to include every element, every time, but learning when to add details to your prompt is a key skill for getting the most from ChatGPT and other LLMs.
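To make the structure concrete, here is a minimal Python sketch that assembles a prompt from the elements above, in the order listed, and skips any optional element you leave blank. The `build_prompt` function and its parameter names are our own illustration, not a feature of ChatGPT or any other platform; its output is simply text you would paste into the prompt box.

```python
def build_prompt(
    goal: str,
    context: str = "",
    input_text: str = "",
    rules: str = "",
    examples: str = "",
    persona: str = "",
    ask_questions: bool = False,
) -> str:
    """Join the prompt elements into one block of text, skipping empty ones."""
    parts = [
        goal,        # what you want to accomplish (required)
        context,     # background on your practice and audience
        input_text,  # draft content or notes to work with (optional)
        rules,       # format, tone, and length requirements
        examples,    # reference tone, style, or structure (optional)
        persona,     # who ChatGPT should act like (optional)
    ]
    if ask_questions:
        parts.append(
            "Ask me any follow-up questions you need, one at a time, "
            "before completing the task."
        )
    # Keep only the elements that were actually provided, separated by blank lines.
    return "\n\n".join(p.strip() for p in parts if p.strip())


prompt = build_prompt(
    goal="I want to rewrite a reminder email to make it friendlier and more patient-centered.",
    context="We're a multi-location optometry group serving mostly older adults in suburban North Carolina.",
    rules="Return a short, friendly message under 100 words, in a tone that feels caring and reassuring.",
    ask_questions=True,
)
print(prompt)
```

Leaving out the input, examples, and persona in this call mirrors the point above: you add an element only when it helps.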

Assess Use Cases

In the spirit of learning through experience, it's smart to begin with simple, low-risk experiments before formally rolling out LLMs across your organization. In other words, push the limits of LLMs yourself before you incorporate them into company-wide workflows.

This checklist can help you assess which tasks are a good fit for AI and where to begin.

- Ownership: Who owns the task? Can you train them to use AI effectively?

- Task Complexity: Is this a relatively simple task (e.g., writing, summarizing, editing), or does it require deep expertise or judgment?

- Time or Cost Savings: How much time or money will this task save if supported by AI?

- Error Tolerance: Is it okay if the first draft has small errors or needs editing? If not, AI may not be the best fit.

- Cost of Error: Would a mistake here damage patient trust, create compliance issues, or require significant rework?

- Ease of Implementation: Can you use ChatGPT directly, or would this require software integration or time-consuming staff training?

- Review Required? Will a human need to review the output before it’s used? If yes, build that step into your workflow.

Easy use cases with relatively low risk and minimal setup include:

- Writing a friendly reminder email for an upcoming eye exam

- Drafting a month of social media captions from a bullet list

- Creating a first draft of your About page or services copy

- Generating ideas for community outreach events

Examples of medium-difficulty, time-saving tasks that need more extensive review:

- Rewriting policy language for a patient-facing handout

- Summarizing team meeting transcripts into action items

- Drafting job descriptions or SOPs based on notes

- Responding to common patient questions via templated text

Higher-risk tasks, which may require integrations or technical setup and need the most monitoring, include:

- Summarizing sensitive feedback from patient surveys

- Drafting insurance documentation for submission

- Automating responses to inbound patient emails or portal messages

- Custom AI chatbots embedded on your website
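Some teams like to record checklist answers in a consistent format so use cases can be compared side by side. The Python sketch below is purely illustrative: the `UseCase` fields cover only a few of the checklist questions, and the rules in `risk_tier` that map answers to the easy, medium, and higher-risk tiers are our own simplifying assumptions, not an established scoring method. Adapt both to your practice's own policies.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    """Answers to a few of the checklist questions (hypothetical fields)."""

    name: str
    touches_phi: bool          # Could the task involve protected health information?
    needs_integration: bool    # Does it need software integration or technical setup?
    errors_tolerable: bool     # Is it okay if the first draft has small errors?
    needs_expert_review: bool  # Does the output need more than a quick read-through?


def risk_tier(case: UseCase) -> str:
    """Map checklist answers to the three tiers above (assumed rules, not a standard)."""
    if case.touches_phi or case.needs_integration or not case.errors_tolerable:
        return "higher-risk"
    if case.needs_expert_review:
        return "medium"
    return "easy"


cases = [
    UseCase("Reminder email for an upcoming eye exam",
            touches_phi=False, needs_integration=False,
            errors_tolerable=True, needs_expert_review=False),
    UseCase("Patient-facing policy handout",
            touches_phi=False, needs_integration=False,
            errors_tolerable=True, needs_expert_review=True),
    UseCase("Insurance documentation for submission",
            touches_phi=True, needs_integration=False,
            errors_tolerable=False, needs_expert_review=True),
]

for case in cases:
    print(f"{case.name}: {risk_tier(case)}")
```

Whatever tier a task lands in, the "Review Required?" question still applies; a lower tier suggests where to start experimenting, not that review can be skipped.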

In this series of articles, we'll continue to cover valuable use cases, common pitfalls, and how to integrate LLMs and other AI tools into your organization.

Precautions for New LLM Users

Beware "Hallucinations"

"Hallucinations" are nonsensical, factually incorrect, or otherwise inaccurate responses from LLMs. Importantly, ChatGPT and other LLMs do not reliably detect their own errors; instead, they present them as true, often very convincingly.

Estimates of hallucination rates vary depending on the topic and the benchmark used, from the low single digits (1-1.5%) to approximately 50%.

If you're using LLMs to generate factual information, including in medical, scientific, or legal areas, it's imperative to fact-check the output. Following best practices for prompt engineering can also reduce hallucination rates.
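To see why fact-checking matters even at the low end of that range, here is a back-of-the-envelope Python sketch. It assumes, purely for illustration, that every response has the same, independent chance of containing a hallucination (real error rates vary by task and are not independent), and the volume of 100 responses is a hypothetical figure.

```python
def expected_errors(rate: float, responses: int) -> float:
    """Average number of responses containing a hallucination."""
    return rate * responses


def chance_of_any_error(rate: float, responses: int) -> float:
    """Probability that at least one response contains a hallucination,
    assuming each response errs independently at the same rate."""
    return 1 - (1 - rate) ** responses


# Compare the low and high ends of the reported range over 100 responses.
for rate in (0.01, 0.5):
    print(
        f"rate {rate:.0%}: ~{expected_errors(rate, 100):.0f} flawed responses per 100, "
        f"{chance_of_any_error(rate, 100):.0%} chance of at least one"
    )
```

Under these simplified assumptions, even a 1% rate gives roughly a 63% chance that at least one of 100 responses contains a hallucination, which is why every factual output needs a check, not just the ones that look suspicious.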

Arguing with ChatGPT Is Usually a Waste of Time

After noticing a hallucination or error, new LLM users will sometimes attempt to correct the LLM in hopes of receiving a better response. Sometimes this works, as you're essentially providing a follow-up prompt.

However, LLMs lack introspection: ChatGPT can't share direct insight into how it arrived at a response or where things went wrong. Instead, you'll usually receive an apology and a promise to do better, but better results aren't guaranteed, either.

If you don't get a satisfactory response after a few follow-up prompts, your time will be better spent fact-checking or correcting the error yourself.

LLMs Aren't a Substitute for Expertise

In part because of hallucinations, any workflow that takes advantage of LLMs must also have a human expert in the loop for review.

In any professional field, a non-expert lacks the foundational knowledge to properly construct a prompt and gauge the quality of LLM outputs. This is especially relevant for medical, legal, and financial work, where the stakes are high, but it's also true of any specialized work.

Even for tasks with low error rates or small consequences for errors, a subject matter expert must be involved at some level. The level of involvement and frequency of review will vary depending on the task.

IP Risks Are a Two-Way Street

If you enter confidential information into an LLM, you no longer have full control over it. While it's possible to mitigate HIPAA disclosure risks with a business associate agreement (BAA), a "better safe than sorry" policy is best when it comes to trade secrets and other highly sensitive info.

But did you know that LLM responses can potentially expose your organization to intellectual property infringement claims?

It's true: while LLM outputs are usually novel, occasionally the outputs will be identical to, or closely derivative of, copyrighted or trademarked material. This can be due to random chance or because the LLM is repeating something from its training data.

Along with fact-checking, it's wise to double-check any significant public-facing outputs for potential IP violations.

Productivity Gains Aren't Guaranteed

One of the most comprehensive studies of LLM adoption in the labor market to date covered 25,000 workers at more than 7,000 workplaces.

The results were underwhelming: employees using AI chatbots like ChatGPT increased productivity by only about 3%, measured as time savings. And there were no significant effects on earnings or recorded hours in any occupation.

The takeaway isn't that LLMs have no benefit, but rather that adopting LLMs doesn't guarantee results. Using LLMs to make work more efficient or increase productivity requires deliberate, organization-level planning.

Reliance on LLMs Might Reduce Cognitive Abilities

According to a recent study by MIT researchers, using ChatGPT instead of doing work the old-fashioned way had some concerning effects on mental function. Compared with LLM users, non-users showed stronger brain connectivity in brain scans, had better memory, and performed better on tests.

The study suggests that exclusively using LLMs for tasks might degrade cognitive skills and memory. Where critical skills are concerned, consider keeping AI in an "assistant" role rather than outsourcing entirely.

Don't Sacrifice Trust with AI

Consumer sentiment toward AI is complicated, and some evidence suggests labeling your business as "AI-powered" or similar may actually decrease consumer trust. Instead, market your vision care practice based on its merits — not just on the tools you use.

However, for consumer-facing AI solutions (for example, an AI agent answering your call center), it's best to disclose that AI is in play. In these cases, transparency prevents confusion and maintains trust.

Bottom line: Don't assume all your customers are fully comfortable with AI. And if you choose to disclose AI use for ethical, transparency, or other reasons, be very clear about how you use it and how it benefits your customers.


Reading not your thing? Watch on YouTube!

Check out the Revival Health YouTube Channel for exclusive content made just for you.